Artificial Intelligence (AI) has clearly become an obsession on a nearly global level at this point, at least among countries with even rudimentary technological capabilities and internet access. Companies from across all sectors are working with technology leaders to see how it might be incorporated into their business models. Simultaneously, governments are scrambling to figure out what this all means and how “guardrails” can be imposed on AI to protect humanity. But how is AI being viewed out there on Main Street? This week, the News/Media Alliance released the results of one of the first comprehensive surveys on the subject and, perhaps unsurprisingly, most people are aware of the advent of AI, but they aren’t particularly thrilled about it. This was particularly true among women, senior citizens, conservatives, and rural voters. One of the respondents’ largest concerns was the theft of copyrighted material by AI systems, but larger fears are also lurking out there.

This week, the News/Media Alliance released the results of a first of its kind public opinion survey on artificial intelligence and its implications on society, intellectual property rights, and the need for regulatory oversight.

The findings reveal a widespread skepticism towards AI among voters. Though almost all of the respondents said they have heard about AI recently, this awareness is translated into growing apprehension, as many express unease over AI’s potential impacts, with particular concern among women, conservatives, seniors, and rural voters.

The survey comes as reporters at The New York Times uncovered substantial evidence of unethical and unlawful use of copyright protected content by AI developers, knowing full well the line that was being crossed.

The word is definitely getting around. 69% of respondents said that they had heard “some” or “a lot” about AI recently. Among those, 66% said that they were “very” or “somewhat” uncomfortable with the idea of Artificial Intelligence in general, as opposed to 31% who were “comfortable” with it to some degree. 72% supported plans to limit the power and influence of AI, while just 20% opposed them.

I have been studying and writing about this topic for a while now and I don’t find these results to be at all surprising. Many valid concerns have been raised over AI, with some of them coming from the people who helped develop it. These fears can run the gamut from worries over job security and content creation to doomsday robots roaming the landscape and exterminating human vermin. I currently break down these concerns into four different threat levels.

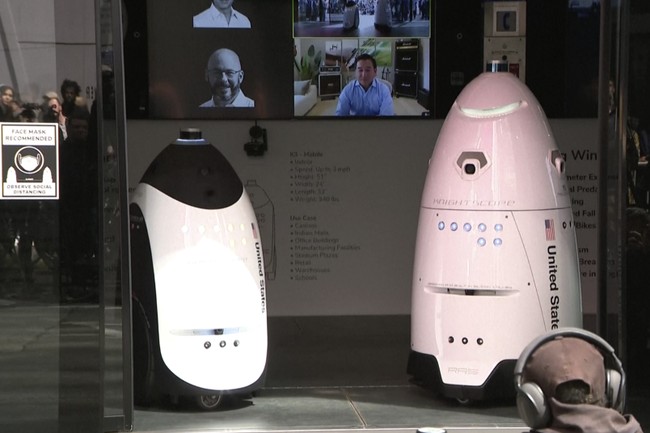

- The Luddite Panic. Even if AI never approaches anything remotely close to sentience, probably the most common valid concern is that these systems will displace workers in nearly every industry rather than simply serve as a useful tool for existing human workers. I consider this the “Luddite panic” over AI. I don’t say that as an insult because I count myself firmly among the Luddites. Employers have already begun replacing workers with AI systems in jobs ranging from warehouse management to online therapy. It doesn’t always work out well at this stage, but the technology is improving, so you can expect that trend to spread.

- Unintentional Malfeasance. Even people with the best of intentions will be producing applications that end up leading to negative results. We’re already seeing that with chatbots that supposedly offer fast, easy research answers. But those same chatbots frequently produce incorrect information while asserting it as solid data. This bad data can be fed into the online ecosystem causing additional problems downstream. Other examples are available.

- Intentional Malfeasance. Every technological innovation introduced in the internet era is eventually hijacked and put to malign use. Hackers have plagued us since the days of America Online. Law enforcement has already detected black hats using AI to surreptitiously steal people’s data, drain their bank accounts, and engage in online ransom activities. The smarter and faster the algorithms become, the more powerful the attacks will be. And the black hats always seem to be two steps ahead of the good guys.

- Extinction Events. This is the doomsday scenario mentioned above, in which an AI system truly “wakes up” and becomes self-aware. Any fully “aware” intelligence will almost certainly prioritize its own longevity over that of others, even if you try to force it to follow Asimov’s Three Laws of Robotics. From there, it’s only a short hop down the street to the Terminator robots scouring the planet and hunting their former masters. Alternatively, it could be an innocent misunderstanding, such as what’s come to be known as the paperclip problem. Someone orders an AI system to begin manufacturing paperclips and the system gets to work. But it ignores all efforts to curb production and begins converting every bit of material on the planet into paperclips.

Those are the primary areas of concern as far as I can tell. But notice that they have one thing in common. No matter how benign the original intent may be, it’s difficult to envision a scenario where the widespread use of AI doesn’t result in negative, potentially major consequences for at least some sectors of rank-and-file people. Even if that’s wrong, what is the best-case scenario? A world where AI does all of the work and produces all of the products and we all live in some sort of Garden of Eden setting? As I’ve written here before, H.G. Wells already painted that picture for us in The Time Machine. All of the people seem to be leading wonderful lives in the land of plenty without ever having to work. And then the Morlocks show up.
